Procedure Over Intelligence: Building Reliable AI Systems

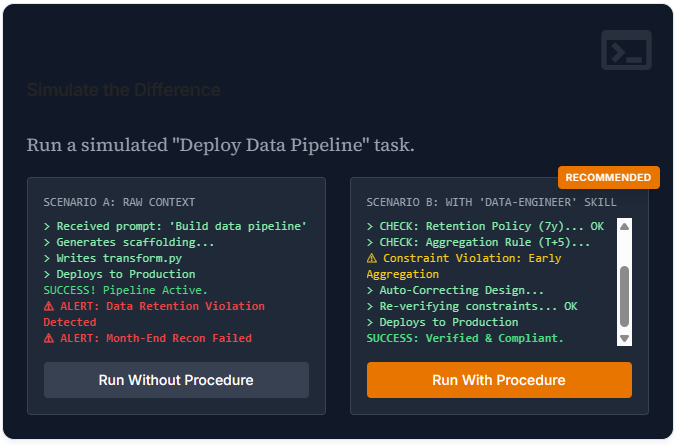

You’ve probably seen this: an AI agent scaffolds an entire data pipeline in twenty minutes. Impressive. Then the same agent, with the same context, generates a pipeline that violates data retention policy. It aggregates transaction data before month-end close. It skips the security review gate. It produces exactly the kind of code that costs someone hours to fix later.

The agent had every requirement document. Every design spec. Every technical constraint anyone could think to provide.

Context without systematic workflows is just noise.

The problem isn’t intelligence. The problem is that knowing what to build doesn’t tell you how to build it correctly, every time.

The Checklist Principle

In 2009, surgeon Atul Gawande published The Checklist Manifesto, documenting how a simple checklist reduced surgical complications by 36%. Not better doctors. Not more experience. Just a systematic way to avoid known failure modes.

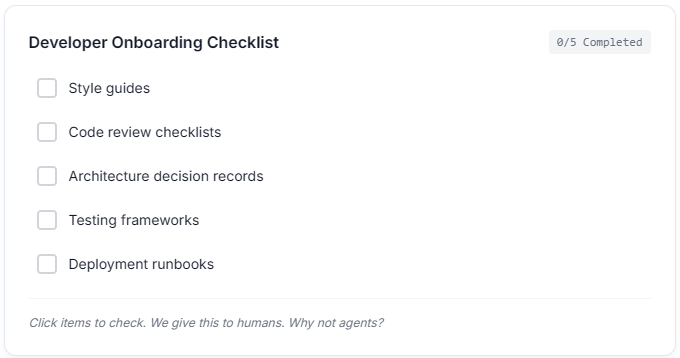

Software teams discovered this decades ago. We don’t tell new developers to “just write good code.”

These aren’t suggestions. They’re accumulated organizational wisdom from every production incident, security breach, and 3am debugging session.

But we don’t give agents any of this. We throw them requirements and hope they improvise well.

The Inflection Point: Why This Matters Now

This is the moment everything changes.

We’re moving from copilots (human-directed, tool-like assistance) to delegated agents (goal-oriented, autonomous work). That shift fundamentally changes what “reliable” means.

Research from METR (Model Evaluation & Threat Research) shows that the length of tasks AI agents can complete autonomously has been doubling approximately every seven months. Tasks requiring human oversight a year ago are now handled end-to-end. The trajectory points toward agents completing week-long projects independently within a few years.

That’s capability. But capability without guardrails is liability.

When an agent is a copilot, you’re there to catch mistakes. When it’s delegated autonomous work (handling deployments, running production pipelines, processing sensitive healthcare records), you’re not. The agent drifts from organizational constraints. Misses edge cases your team learned about painfully. Optimizes for the wrong objective.

The gap between “can execute tasks” and “executes them correctly, every time, aligned with how we actually work” is the missing infrastructure of systematic governance.

Real-World Costs of Missing Workflows

A healthcare system asks an agent to build automated lab result processing. The agent structures data efficiently, reducing storage by 40%. Later, auditors discover that the compression scheme violates HIPAA’s auditability requirements. Six months of results need reprocessing.

A financial services firm deploys an agent for month-end transaction reconciliation. It works perfectly for the standard flow. Then during actual month-end close, it skips the reconciliation validation gate during edge case handling, costing three days of manual recovery work.

Neither agent lacked intelligence. Each understood the what. Each had requirements and technical specs. Each was missing the organizational how: the systematic guardrails that encode “we learned this the hard way, and we’re not doing it again.”

Enter Agent Skills

What if you could package organizational expertise the way you package code?

Not as dense prompts or scattered documentation, but as executable workflows that agents can discover, load, and apply automatically.

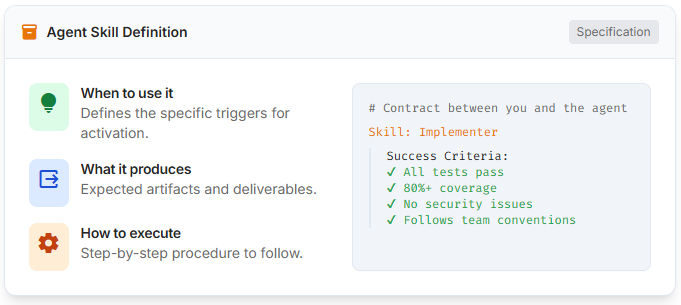

That’s what Agent Skills are: self-contained modules that encode how work actually gets done. The format emerged from the need to make agent behavior reproducible across tools and teams: a specification that any IDE or AI provider can support.

Think of it as a contract between you and the agent. Not “write me a login service” but “use the implementer skill with our team’s authentication patterns, mandatory test coverage thresholds, and mandatory security review gates.”

Context informs the agent what to build. Skills enforce how it gets built.

A Format, Not Proprietary Technology

The beauty is simplicity. An Agent Skill is just:

my-skill/

├── SKILL.md # The contract

├── references/ # Examples, templates, checklists

└── scripts/ # Optional automation

That’s it. The SKILL.md file uses standard YAML frontmatter and Markdown. Nothing proprietary. Just structured clarity:

---

name: implementer

description: Ship production-ready code with tests and docs

---

## When to Use

- Building new features

- Bug fixes requiring code changes

- Refactoring existing systems

## Core Capabilities

- Clean, modular code in any language

- Comprehensive test coverage

- Error handling and logging

- Documentation

## Workflow

1. Understand requirements

2. Design approach

3. Implement with tests

4. Document decisions

5. Verify quality gates

## Success Criteria

- All tests pass

- 80%+ coverage

- No security issues

- Follows team conventions

Readable. Versionable. Portable across tools.

Open Standard, Vendor Independent

Here’s what matters: this isn’t locked to one vendor.

Agent Skills is an open standard. It’s YAML and Markdown. No proprietary tooling, no platform constraints. Write a skill once, use it across Claude Code, Claude.ai, Gemini CLI, or any platform supporting the format.

Your team’s expertise travels with you, not with your IDE or AI provider. That independence matters for regulated industries, long-lived systems, and anyone who’s been burned by vendor lock-in before.

Skill Chains: Where Power Emerges

Individual skills are useful. Skill chains are transformative.

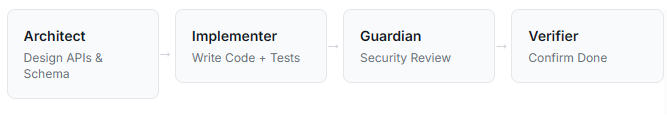

Here’s how a team builds a feature end-to-end:

brainstorming

→ (refine requirements with domain experts)

architect

→ (design APIs and schema with governance in mind)

implementer

→ (write code with mandatory test coverage)

qa-tester

→ (comprehensive test suite and edge cases)

guardian

→ (security scanning and vulnerability validation)

verification-before-completion

→ (confirm actually done, not just claimed)

Each step has clear inputs, outputs, and success criteria. Each builds on the last. Most importantly: each encodes how your organization actually works.

The architect doesn’t just design an API. It validates that requirements align with regulatory constraints. Refined requirements conflict with architecture? The chain stops. Constraint violated? Fails early and visibly. No silent drift. No surprises at 2am deployment.

This isn’t theoretical. This is accumulated wisdom from every retrospective where someone said “we should have caught that earlier.”

Governance as Code: Structural Enforcement

Enterprise concern: “How do we ensure AI agents follow our security policies and compliance requirements?”

The answer isn’t better prompts. It’s encoded constraints.

With Agent Skills, compliance becomes structural:

constraints:

- All database changes require migration scripts

- All API endpoints require authentication

- All deployments require security review

- Healthcare data access logs audit trail

- No aggregation before T+5 business days (finance constraint)

An agent using the implementer skill cannot bypass the security review gate. It’s not optional. It’s embedded in the workflow. The agent fails the skill execution if constraints aren’t met.

Healthcare learned this decades ago: move from “trust clinician judgment” to “systematic protocols that prevent mistakes.” Not because clinicians weren’t competent. Because systematic beats heroic every single time.

The Demo Trap

I’ve built enough “impressive” prototypes to know the pattern:

Day 1: “Wow, this AI just scaffolded an entire microservice!”

Week 2: “Why doesn’t it follow our logging standards?”

Month 3: “We’re spending more time fixing AI code than writing it ourselves.”

The gap between demo and production system is systematic governance.

Agent Skills don’t make agents smarter. They make them predictable. And in production systems (where you’re handling customer data, regulatory compliance, or financial transactions), predictability beats brilliance every time.

Production-Ready Skills: Real-World Templates

I’m publishing production-oriented skills across core domains as open source. These address where teams actually fail. Strategy gets unclear. Architecture gets skipped. Code ships without tests. Deployments break at midnight.

Each skill locks in a boundary that stops mistakes at that transition point:

implementer: Enforces test coverage thresholds, documentation requirements, and security review gates before code is marked complete.data-pipeline-engineer: Requires data quality checks, incremental loading patterns, and medallion architecture compliance. Catches aggregation-before-retention-window mistakes early.guardian: Runs security scans, validates error handling, checks for common vulnerability patterns.verification-before-completion: Forces agents to run actual verification commands before claiming success. Eliminates “should work” commits.

Why these domains? Because these are the places organizations bleed. Not all problems. The ones that cost you time and trust when they fail.

What Actually Changes

Without systematic workflows: You give the agent context PostgreSQL, medallion architecture, GDPR retention. It generates a pipeline. Then requirements shift. The agent “optimizes” by dropping partition strategies. Data quality checks get compressed. You discover the issue during QA, or worse, in production.

With Agent Skills: The data-pipeline-engineer skill requires data quality checks before any transformation completes. Retention constraints are encoded in success criteria. The agent cannot mark work done without them.

Context informs what to build. Workflows enforce how it gets built.

Adoption Pathway

Adoption won’t happen overnight. Teams will need to:

- Document actual workflows (not aspirational ones)

- Encode standards currently enforced via code review

- Create examples for specific tech stacks

- Version and maintain skills as practices evolve

But here’s the reality: this work is already happening. It’s just scattered in code review comments, onboarding docs new developers can’t find, tribal knowledge that walks out the door when people leave.

Agent Skills make it portable, discoverable, and executable.

The Real Inflection Point

We’re at a moment where AI capability has outpaced organizational wisdom. Agents can execute. But execution without structural constraints is a liability.

The gap isn’t intelligence. It never was. It’s systematic governance.

Every security policy you’ve written, every code review pattern your team converged on, every lesson learned from production incidents at 3am that accumulated expertise is the actual value. Not the individual knowledge. The systems you built to prevent repeating mistakes.

Agent Skills don’t automate judgment. They encode it. They make the difference between an agent that can do work and an agent your organization actually trusts to do it.

That’s the inflection point. That’s what matters now.

What Comes Next

Build workflows. Version them like code. Make them portable. Let your team’s expertise travel faster than your team can.

Intelligence is common. Systematic excellence is rare. That’s the edge.

Resources & Next Steps

- Agent Skills Specification: agentskills.io — the open standard for portable agent workflows

- Production Skills Collection: GitHub Repository — ready-to-use skills across architecture, development, data engineering, and operations

- Interactive AI Knowledge Base: Explore & Chat with the Agent Skills Collection